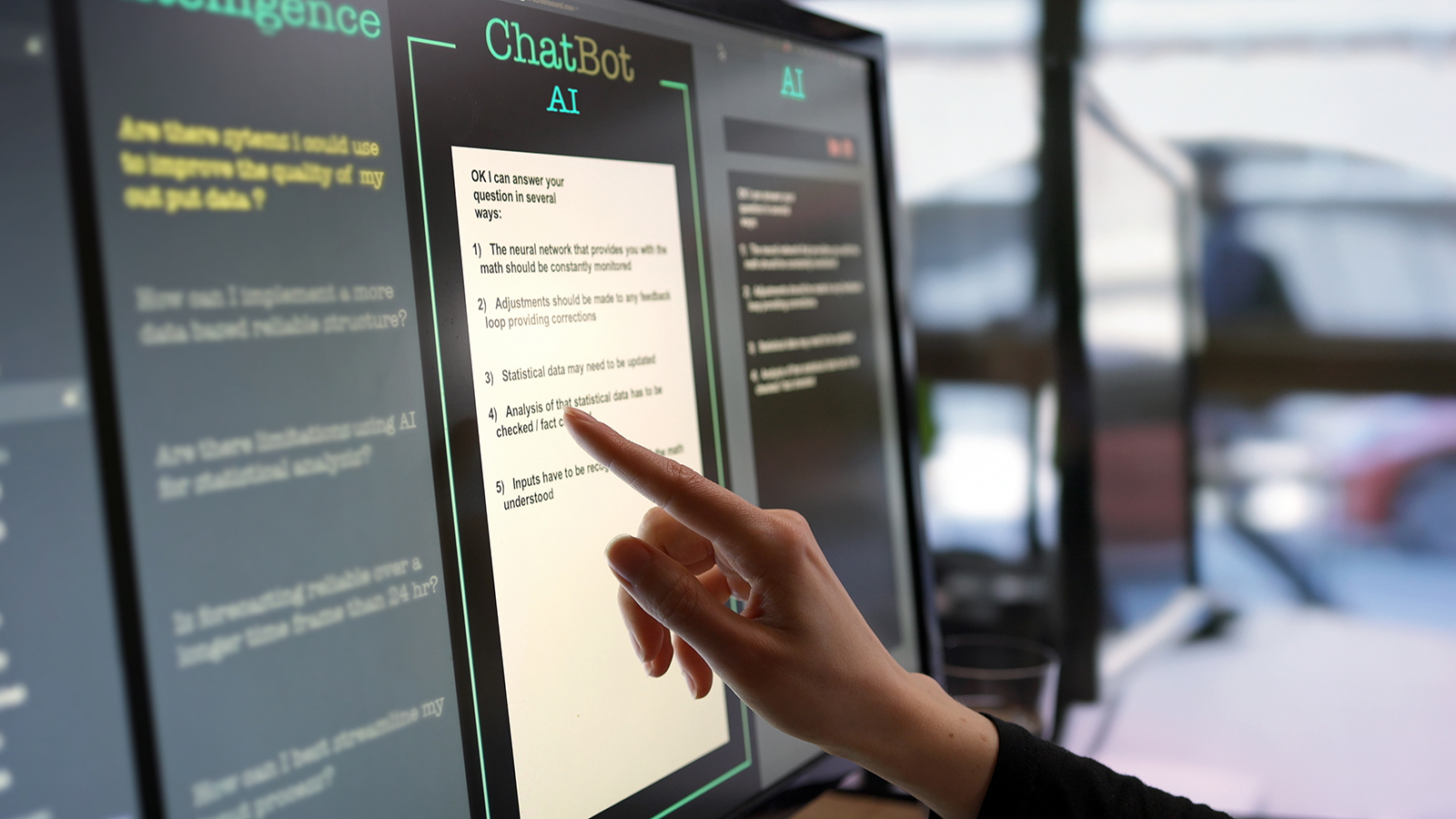

Large language models (LLMs) like ChatGPT have vast potential in the financial sector. It’s a classic scenario of technology optimizing human work, not replacing it.

These artificial intelligence models can be harnessed to handle routine queries in customer-facing roles, improving customer service efficiency and shortening response times. They also excel behind the scenes, processing and analyzing lengthy financial documents, regulatory guidelines and complex contracts. By distilling this information into accessible insights, they help decision-makers make informed choices.

AI models also support continuous education: finance professionals can use them to stay current with the latest industry developments.

Dasera’s Ani Chaudhuri: “Tremendous advantages” of a governance framework.

While the benefits of AI language models are significant, it's crucial to address the security and privacy challenges they bring when used in the financial sector. One of these challenges is inadvertent information leakage: an AI model might reproduce sensitive or confidential information that was present in its training data.

Training, Design and Auditing

To mitigate this risk, robust data governance practices are paramount. Establishing clear guidelines on data access, specifying the data AI models can be trained on, and implementing checks to ensure confidential information is not included are essential steps.

Privacy by design is another crucial aspect. AI systems must be designed with privacy as a core consideration. Techniques such as differential privacy can be employed to limit how much can be inferred about any individual record in the training data, making sensitive information far harder to reverse-engineer.
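To make the idea of differential privacy more concrete, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. It is a simpler illustration than differentially private model training, and it is not a production implementation. The function names, the sample records and the `epsilon` value are all assumptions chosen for this example.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with rate 1/scale
    # follows a Laplace distribution centered at 0 with the given scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that match `predicate`.

    A counting query has sensitivity 1 (adding or removing one record
    changes the result by at most 1), so Laplace noise with scale
    1 / epsilon is the standard calibration for epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: account balances used only for illustration.
balances = [1200, 85000, 430, 152000, 9700]
noisy = private_count(balances, lambda b: b > 50000, epsilon=0.5)
print(f"Noisy count of high-balance accounts: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy; choosing that trade-off is a governance decision as much as a technical one.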

Regular audits of AI systems are necessary to maintain data integrity and detect potential data leaks or misuse. These audits should be thorough and conducted at frequent intervals. Additionally, organizations must strengthen their overall cybersecurity framework to reinforce the security around AI systems.

Cybersecurity measures, such as robust authentication protocols and encryption, should be implemented to safeguard AI systems and the data they process.

Post-deployment monitoring ensures that AI models' outputs do not compromise sensitive information. This ongoing monitoring allows timely detection and remediation of potential risks or vulnerabilities.
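One simple form this monitoring could take is a screening step that inspects each model response before it reaches a user. The sketch below assumes a small set of regular expressions for sensitive-looking strings; the pattern list, labels and function name are hypothetical and far from exhaustive, and a real deployment would need a much broader, reviewed rule set.

```python
import re

# Hypothetical patterns for illustration only; not an exhaustive rule set.
PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def screen_output(text):
    """Redact sensitive-looking strings and report which patterns fired.

    Returns a (redacted_text, findings) tuple, where findings is a list
    of pattern labels that matched, in the order they were checked.
    """
    findings = []
    redacted = text
    for label, pattern in PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[REDACTED {label}]", redacted)
    return redacted, findings


response = "Your SSN 123-45-6789 is on file; contact jane@example.com."
safe_text, flags = screen_output(response)
if flags:
    # In practice this event might be logged for the audit trail.
    print("Flagged:", flags)
print(safe_text)
```

Pattern matching of this kind catches only well-formed identifiers, so it complements, rather than replaces, the audits and governance controls described above.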

Responsible AI Culture

Furthermore, training employees about the capabilities and limitations of AI systems is essential. This education empowers them to make the most of AI technologies while being aware of potential risks and challenges.

By fostering a culture of responsible AI usage and creating awareness about data security and privacy, organizations can mitigate potential pitfalls and enhance the overall effectiveness of AI implementation.

While AI language models like ChatGPT offer tremendous advantages in the financial sector, adopting a comprehensive governance framework is crucial.

In summary, organizations can leverage the power of AI while protecting security and privacy by adhering to these best practices:

- Robust Data Governance. It's imperative to establish strong data governance practices. These should define who can access the data, what data the AI can be trained on, and what checks are in place to ensure no confidential data is included.

- Privacy by Design. AI systems must be designed with privacy at the forefront. Techniques like differential privacy can help ensure the training data can't be reverse-engineered.

- Regular Audits. Frequent and thorough audits of AI systems can help detect and rectify any potential data leaks or misuse.

- Cybersecurity Measures. Strengthening the organization’s overall cybersecurity framework will reinforce the security around AI systems.

- Continuous Monitoring. These models should be monitored post-deployment to ensure their outputs do not compromise sensitive information.

- Training. Finally, employees should be educated about the capabilities and limitations of these AI systems to prevent misuse and ensure they understand the potential risks.

AI systems have tremendous potential, but only by facing and overcoming these challenges can we reap the benefits they promise while ensuring the utmost security and privacy.

Moreover, it's crucial to manage expectations. While ChatGPT can augment the work of human employees, it is not a silver bullet. Organizations must be mindful of this, balancing the implementation of AI with a commitment to maintaining the “human touch” in their operations.

Let's not see technology as a threat, but rather as an instrument for achieving our ambitions more effectively. The finance sector has continually evolved with technology, and large language models like ChatGPT are merely the next step in that journey.

Ani Chaudhuri is co-founder and chief executive officer of data security company Dasera.
