For Ibrahim Dusi, chief risk officer for the Americas at Revolut, artificial intelligence and machine learning “are an important tool in our toolkit” for fighting fraud or identifying bad actors.

These technologies, he says, can also help reduce costs, increase efficiency, provide a friction-free customer experience, reduce bias, and speed the creation of risk models.

“In my opinion, it’s a game-changer if used correctly,” says Dusi, who joined Revolut, the U.K.-based “super app” financial services company, in February after previous roles at fintech lender Happy Money and at Capital One.

He is not alone in his AI/ML enthusiasm.

McKinsey & Co.’s December 2021 global, multi-industry State of AI survey found artificial intelligence technology – which includes machine learning, computer vision and natural language processing – to be on the rise in a host of business activities. They include operations optimization, risk modeling, fraud and debt analysis and other applications of analytics.

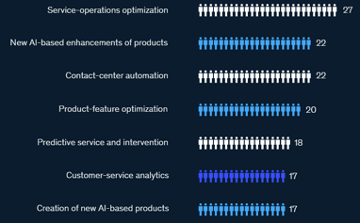

Most commonly adopted AI use cases (percentage responses in McKinsey survey)

“AI adoption is continuing its steady rise: 56% of all respondents report AI adoption in at least one function, up from 50% in 2020,” the report says. “Nearly two-thirds say their companies’ investment in AI will continue to increase over the next three years.”

In the Forefront

The banking sector is ahead of other industries in AI and ML adoption, according to the Anti-Fraud Technology Benchmarking Report from the Association of Certified Fraud Examiners (ACFE) and advanced analytics firm SAS. This is due in large part to banking’s data-intensive nature and the ongoing push to identify patterns and glean insights from massive amounts of fraud-related data.

The 2022 survey of nearly 900 fraud examiners worldwide found that 31% of respondents in banking are currently using AI/ML technology in anti-fraud efforts – up from only 19% in 2019 – with another 27% anticipating adoption over the next one to two years.

An anticipated 150% leap in AI adoption across all sectors over the next two years “signals that organizations aren’t resting on their laurels,” says ACFE director of research Andi McNeal. “The landscape could look quite different when we survey in 2023.”

“Artificial intelligence and machine learning technologies are being used to address risks that both customers and financial firms face,” says Stu Bradley, vice president of fraud and security intelligence at SAS.

This can involve thwarting first- and third-party fraud and driving operational effectiveness in internal fraud and risk operations, Bradley notes, adding: “When we look at discrete areas where AI/ML technology is being leveraged for risk management, you would include instances where AI facilitates the analytics that can then permit payment authorization, digital authentication and identity proofing during the application process, and for fraud investigations that require the use of text mining to cull through unstructured data to then pull out insights.”

AML and Other Benefits

AI/ML technology can potentially be applied to an even broader array of financial sector activity. This includes anti-money laundering efforts, compliance data analysis, credit decisioning, document scanning and processing, cyber threat analysis and prevention, and cryptocurrency transaction monitoring.

In a March 10 announcement expanding on collaborative initiatives announced in August, EY and Microsoft said they will bring together technologies including machine learning and natural language processing to “help businesses’ legal and compliance teams to identify and mitigate ethical and reputational risks; investigate and manage regulatory challenges; detect fraud; and manage privacy and security risks.”

Charles Delingpole, founder and CEO of ComplyAdvantage, a provider of financial crime detection technology that uses machine learning and vast quantities of data, says that a primary factor fueling demand for and deployment of AI/ML is return on investment.

“The real cost of fighting crime at financial firms is not the technology and it’s not the data,” he says. “It’s the human cost of labor like the armies of analysts who are now no longer needed, thanks to AI technology.”

A second impetus is the enormous cost of fines levied when financial firms fail to meet compliance requirements. A third is the ongoing boom in regulatory technology, or regtech, investment and innovation.

Firms no longer have to build AI/ML systems themselves, Delingpole says. They can readily buy them, and the technology increasingly comes embedded in established vendors’ products, which makes it easier to integrate. This comes at a time of widespread and worsening fraud and ransomware, as documented in ComplyAdvantage’s State of Financial Crime Report released in February.

Dusi of Revolut notes that “we are living in an era of instant gratification. Everyone expects instant results and a better customer experience,” even as risks still have to be managed.

Black Box Problem

However, one issue is seen as constraining broader use of artificial intelligence in parts of the financial sector. Anthony Mancuso, global head of risk modeling and decisioning at SAS, describes it as “challenges around explaining AI and ML models, and this feeds into regulatory concerns.” Concerns about explainability, especially with so-called black-box models that regulators scrutinize and may reject, deter many firms from pursuing or experimenting with AI, he says.

Mancuso believes this will continue until authorities provide more concrete guidance on “how you should and should not use these models.” As a result, he notes, firms are concentrating AI on more straightforward business cases, such as identifying patterns or anomalies in data, rather than on models that drive more complex, and therefore more opaque, processes.

“You can run the risk with machine learning algorithms of skipping steps that you should not have skipped, or making the wrong decision,” and thus it is critical to manage AI/ML risks and ensure model explainability, Mancuso adds.

Authenticating and Vetting

The emergence of new AI/ML companies shows where innovation continues unabated. Such start-ups include Sardine, which uses AI for real-time fraud scoring based on a user’s identity and behavior patterns drawn from tens of thousands of data points; Mirato, in third-party risk intelligence; and Vartion, which offers AI-powered compliance and risk decision support.

Sardine co-founder and CEO Soups Ranjan says that while he views behavior-based risk scoring as truly innovative, he is keen on his next project: a biometric-based facial recognition system for identity authentication on mobile phones. “How you hold the phone, swipe and scroll along with a combination of other factors will create a new type of identity profile that cannot be spoofed,” he explains.

Revolut’s Dusi looks forward to AI helping to vet potential customers and thus promoting financial inclusion among underserved populations. “This can be achieved by processing alternative sources of data,” which AI can facilitate, he says.

Mancuso of SAS is similarly upbeat about the future for AI, but, he says, “Risk managers will need to have more agile systems to help them keep track of AI/ML technology and will have to adjust their thinking about how to manage risk. The new focus will be on explainability, traceability, and they will need to have confidence that all the new metrics do make sense.”

Katherine Heires is a freelance business writer and founder of MediaKat llc.