Artificial intelligence is turning the worlds of technology, business and government on their heads. All face strategic conundrums as, with ever-increasing power and visibility, large language models and generative AI spread faster than operating rules can be set or ethical guardrails constructed.

There is general agreement about those needs. Tech industry leaders are urgently advocating regulation. Sam Altman, chief executive of the ChatGPT developer OpenAI, has joined many others in equating the risks with those of pandemics and nuclear war.

The European Parliament set out to be the first in the world with wide-ranging legislation mandating human oversight of AI innovation and risk-based restrictions on deployment. The U.S. Congress authorized a National Artificial Intelligence Advisory Committee (NAIAC) to advise the President, and the Biden administration has committed to “responsible American innovation in artificial intelligence” and outlined a Blueprint for an AI Bill of Rights.

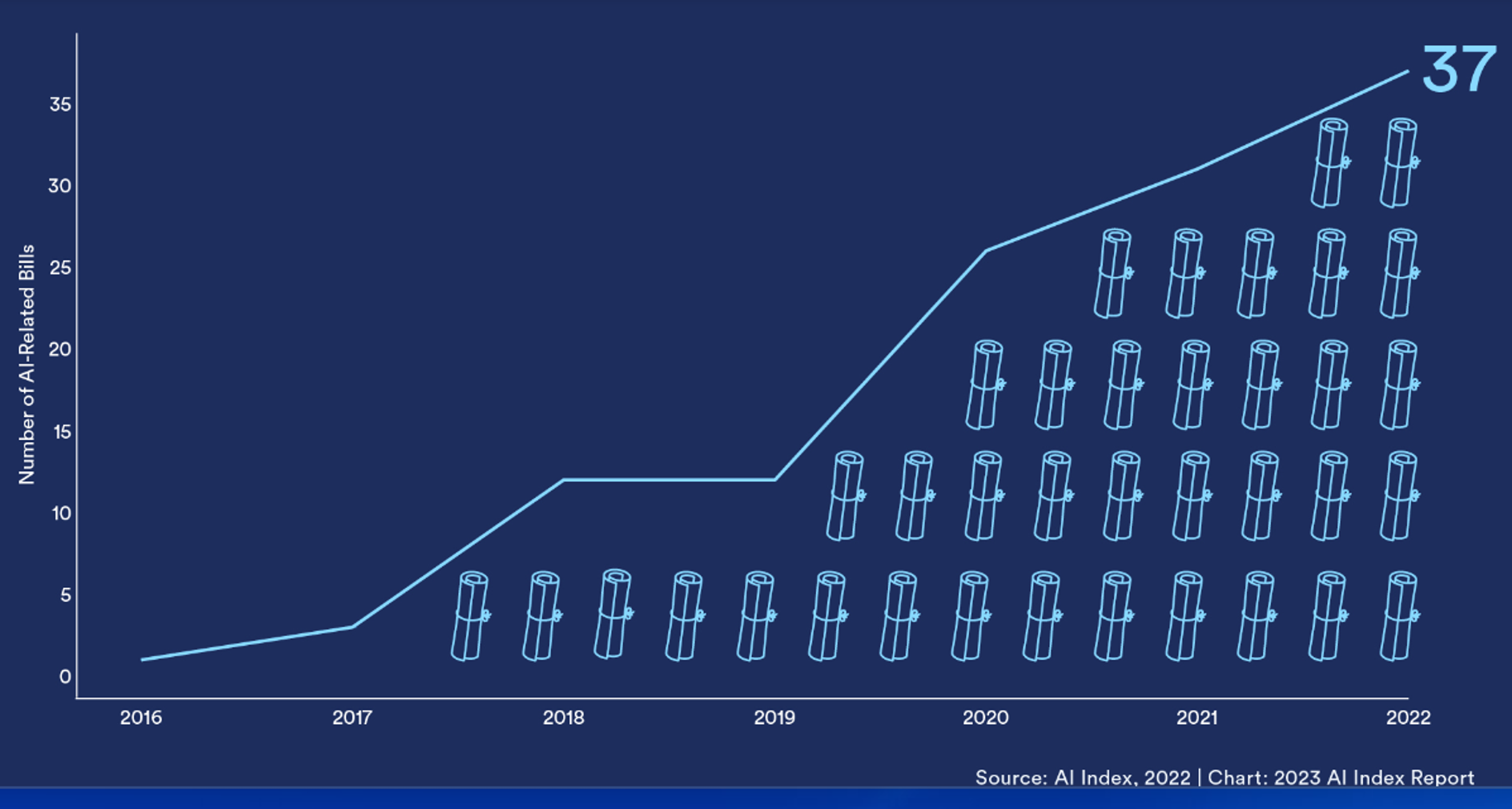

Although legislative momentum has gradually been building around the world (see graph), it will take time for significant laws to be enacted and formally implemented. As a result, regulated sectors such as finance and health care must maintain compliance with legacy rules while also pushing the innovation envelope.

Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) tallied the number of AI-related bills passed into law globally.

Banks may have a taste of what is to come, from their experience with model risk management and anti-bias provisions. The European Union’s AI Act would allow for regulatory sandboxes, or protected zones for experimentation, similar to programs that the U.K. Financial Conduct Authority and other agencies established over the last decade to foster fintech innovation.

The Monetary Authority of Singapore, which stands out among central banks and regulators as an innovation booster – including through sandboxes – is partnering with Google Cloud “to advance the development and use of responsible generative AI applications” in its digital services. “Through this, we hope to inspire greater adoption of responsible generative AI in the financial sector,” assistant managing director (technology) Vincent Loy said on May 31.

Chatbot Alert

On the AI frontier, the U.S. Federal Trade Commission “will vigorously enforce the laws we are charged with administering, even in this new market,” chair Lina Khan vowed in a New York Times guest essay.

AI tools “being trained on huge troves of data in ways that are largely unchecked” can unfairly lock people out “from jobs, housing or key services,” Khan warned. “Existing laws prohibiting discrimination will apply, as will existing authorities proscribing exploitative collection or use of personal data.” The FTC is on guard against the technology becoming concentrated in a small number of dominant firms or engendering “collusion, monopolization, mergers, price discrimination and unfair methods of competition.”

AI also risks “turbocharging fraud . . . Chatbots are already being used to generate spear-phishing emails designed to scam people, fake websites and fake consumer reviews – bots are even being instructed to use words or phrases targeted at specific groups and communities,” Khan wrote.

Citing a rise in customer complaints, the Consumer Financial Protection Bureau on June 6 published an “issue spotlight” on chatbots. “A poorly deployed chatbot can lead to customer frustration, reduced trust and even violations of the law,” said CFPB director Rohit Chopra.

The Securities and Exchange Commission’s Investor Advisory Committee in April laid out for SEC Chair Gary Gensler “perspectives on the importance of providing ethical guidelines for artificial intelligence and algorithmic models utilized by investment advisers and financial institutions.” Gensler said on June 13 that AI, machine learning and predictive data analytics are being considered in the context of proposed brokerage conflict-of-interest rules that the agency is working on.

Deeper into Modeling

Reggie Townsend, a NAIAC member who is director of the Data Ethics practice at analytics solutions leader SAS, says that according to his Risk Research and Quantitative Solutions colleagues, AI is “increasingly being used in risk model development, especially for behavioral models.”

Reggie Townsend of SAS: “You can’t shortcut the data.”

For example, in engineering, “firms are using AI to find previously unrevealed relationships,” Townsend says. “They are also using more data sources, including alternative data, to power these findings. The variables discovered through AI, however, are still being put into traditional regression techniques.”

In addition, AI-derived models are used for benchmarks in challenging traditional models. AI is also “very good at anomaly detection and often used for data quality – both input and output,” Townsend adds, as well as for early warnings when borrowers are in credit distress, when proactive customer engagement can prevent losses.

As Big Tech companies pour billions into AI initiatives (including Microsoft’s reported $10 billion investment in OpenAI), and with Accenture anteing up $3 billion this month, SAS announced in May that it will invest $1 billion over three years to develop advanced analytics solutions for multiple sectors using the cloud-native, massively parallel SAS Viya platform. The investment includes direct research and development, industry-focused line-of-business teams, and industry marketing efforts, and “is in line with the trend toward democratizing data analytics,” the company said in its announcement.

“Businesses face many challenges, from the threat of economic recession and stressed supply chains to workforce shortages and regulatory changes,” said SAS CEO Jim Goodnight. “With insights from industry-focused analytics, resilient organizations can find opportunity in these challenges. Through this investment, SAS will continue to support companies using AI, machine learning and advanced analytics to fight fraud, manage risk, better serve customers and citizens, and much more.”

Machines versus People?

“Whose vision is driving AI? . . . What is the dominant narrative?” asked Massachusetts Institute of Technology professor Simon Johnson at a June 12 Brookings Institution event. “It’s about – I think – machines replacing humans.”

Co-author with Daron Acemoglu, also of MIT, of Power and Progress: Our Thousand-Year Struggle Over Technology and Prosperity, Johnson sought to put AI in that historical perspective. Replacing people, whether to win at chess or check out at supermarkets, “is far too skewed in one direction and one tradition of computer science,” said Johnson, who gained renown for his post-Great Financial Crisis book 13 Bankers, written with James Kwak, and is co-chair of the CFA Institute Systemic Risk Council.

Johnson added: “The other direction, which we prefer, is one in which you actually design machines and tend to machines to help humans, to augment human capacities and capabilities. If you do that, and change the vision, we argue you will be happier with the outcomes in terms of the jobs you create.”

Investment risk analytics entrepreneur Richard Smith, now chairman and executive director of the Foundation for the Study of Cycles, said in a LinkedIn post, “When it comes to new generative AI technologies like ChatGPT, there’s a disconnect between what people think the new technology is and what it actually is. That’s already led to the early stages of bubble formation.”

Generative AI software revenue will rise almost tenfold, to $36 billion, in 2028, according to S&P Global Market Intelligence.

On the whole, Smith tells GARP Risk Intelligence, generative AI is “going to improve productivity. It’s going to improve margins. I think that risk professionals absolutely will integrate AI into their practices, just like we integrated computers and data into our practices.

“Those risk professionals that really spend the time to invest in this technology, and to use it responsibly and in a measured way, will definitely succeed. The technology really can produce some extraordinary results, some extraordinary outputs.”

“Humans can’t monitor or analyze large volumes of data very quickly or effectively,” says SAS’s Townsend. “That’s where AI excels. Risk analyses that used to take days can be done in a fraction of the time, helping banks manage risk in near real time.

“AI’s automation capabilities will prove to be a real game-changer for risk managers in more accurately assessing credit risk, doing more expansive stress testing, and better detecting fraud and financial crime, for example,” Townsend continues. “That’s also why it’s so critically important to get the fundamentals right. You can’t shortcut the data, models or processes.”

Trust and Anti-Discrimination

On the downside, “In a heavily regulated industry like financial services, jumping into the current large language model craze might be taking on too much risk,” Townsend cautions.

He notes that “there are proven technologies, with trustworthy AI capabilities built in, that will provide better peace of mind while still providing a competitive edge.”

There must be sensitivity to how “minority populations have been impacted by laws, social norms and business practices, many of which were discriminatory at one time in our history,” Townsend adds. “Unfortunately, many disparate outcomes still exist, primarily because those laws, norms and practices have a long tail effect, and encoding those same laws, norms and practices into our digital lives will only intensify them.”

Lending or anti-fraud models trained on historical data can perpetuate various biases, not least the redlining practices that continue to haunt property valuation and mortgage decisions. Says Townsend, “It’s vital to anticipate and remediate these risks, injecting positive bias where appropriate and putting humans at the center so models don’t harm vulnerable people.”

Alignment, Explainability, Cybersecurity

Avivah Litan, distinguished VP analyst at Gartner, comments on risk, legal and compliance challenges emerging in such areas as:

- Aligning models with human goals – “Regulators should set a timeframe by which AI model vendors can ensure their GenAI [generative AI] models have been trained to incorporate pre-agreed-upon goals and directives that align with human values. They should make sure AI continues to serve humans instead of the other way around.”

- Explainability and transparency – “Even the vendors don’t understand everything about how [GenAI models] work internally. At a minimum, enterprises require attestations regarding the data used to train the model, e.g., regarding the use of sensitive confidential data” such as personally identifiable information or company business plans.

- Disinformation, malinformation, misinformation – “This includes fake news that polarizes societies, undermines fair elections and democracies, causes personal harm, social unrest and other injuries. Enterprises should push regulators and vendors to set timeframes by which AI model vendors must use standards to authenticate provenance of content, software and other digital assets used in their systems.”

- Accuracy – “GenAI systems consistently produce inaccurate and fabricated answers called hallucinations. Enterprises and regulators must ensure that AI vendors provide users with the capability to assess outputs for accuracy, appropriateness and safety so that, for example, they can distinguish made-up facts from real ones.”

- Intellectual property and copyright – “There are currently no verifiable data governance and protection assurances regarding confidential or protected enterprise information. Enterprises and regulators should enforce: (1) Controls whereby AI vendors identify all copyrighted materials that are used in model operations (the EU AI Act is proposing such a rule); and (2) privacy and confidentiality assurances that AI vendors give to any constituent asking for them to ensure private data is not retained in the model environment.”

- Threat intelligence sharing – “Enterprises should seek out and participate in international agencies that share threat intelligence on malicious actors’ use of AI to inflict harm on targets. Once threats are detected, technical measures should be put in place to block or disarm the threats.”

Markets Are More Susceptible

The range of operational, moral, economic and legal questions, along with AI’s potential to compound risks instead of mitigating them, puts the financial services industry in uncharted territory, states Thomas Vartanian, executive director, Financial Technology & Cybersecurity Center.

Thomas Vartanian: Balance benefits against the threats.

The longtime financial industry attorney and ex-regulatory agency official sees red flags in the resource and technical limitations that make it difficult for regulators to keep pace and take actions in real time; the inability of AI applications to meet consumer protection and model explainability needs; and inaccuracies in AI programming that may impact compliance and trading oversight.

“Increasing security threats facilitated by the use of AI can make government processes, payments systems, trading markets and exchanges more susceptible to misuse, abuse, attack and manipulation,” warns Vartanian, whose most recent book is The Unhackable Internet: How Rebuilding Cyberspace Can Create Real Security and Prevent Financial Collapse.

“We all certainly benefit from what AI can provide,” Vartanian says. “But the benefits must be balanced against the threats that can emerge to economies, people and companies by embracing technology for technology’s sake without a legal and moral framework in place. We should not suffocate innovation, but even more importantly, we should not blindly cede our futures to machines controlled by people who may be largely unaccountable.”
